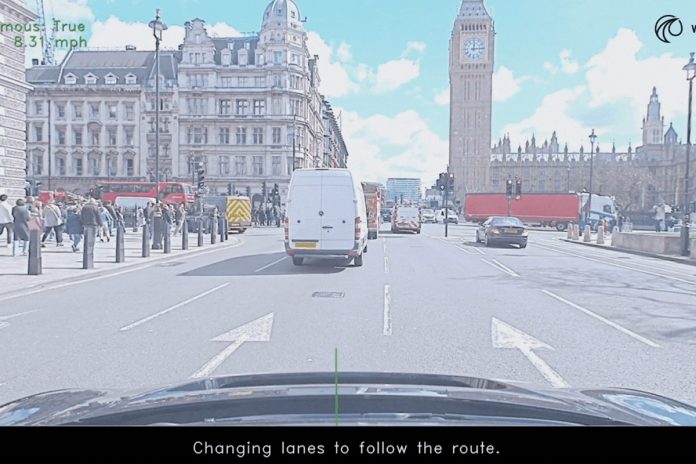

Wayve, the developer of Embodied AI for assisted and automated driving, has unveiled LINGO-2, a new closed-loop driving model that links vision, language, and action to both explain and determine driving behavior. With the model, Wayve aims to give users more control over, and customization of, the autonomous driving experience. The company said that LINGO-2 is also one of the first vision-language-action models (VLAMs) to be tested on public roads.

Building on LINGO-1, Wayve’s earlier research model and an open-loop driving commentator, the new closed-loop driving model unites language with driving to provide visibility into its understanding of driving scenarios. Wayve’s AI driving models learn to drive through data and experience, without hand-coded rules or HD maps. LINGO-2 combines a Wayve vision model with an auto-regressive language model (a type of model traditionally used to predict the next words in sentences) to predict a driving path and provide commentary on its driving decisions.
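For readers unfamiliar with the term, the following is a minimal toy sketch of what "auto-regressive" means: the model emits one token at a time, and each emitted token is fed back in as context for the next prediction. It is not Wayve's implementation. The lookup table, the `predict_next` helper, and the example commentary are all hypothetical stand-ins for a trained model.

```python
# Toy illustration only: a hand-written lookup table stands in for a trained
# language model. All names and tokens here are hypothetical.
NEXT_TOKEN = {
    "<start>": "slowing",
    "slowing": "for",
    "for": "the",
    "the": "cyclist",
    "cyclist": "<end>",
}


def predict_next(tokens):
    """Return the next token given the sequence so far.

    A real model would condition on the full context (and, in a driving
    model, on camera input); this toy version only looks at the last token.
    """
    return NEXT_TOKEN.get(tokens[-1], "<end>")


def generate(max_steps=10):
    """Greedy auto-regressive decoding: each output is appended to the input."""
    tokens = ["<start>"]
    for _ in range(max_steps):
        nxt = predict_next(tokens)
        if nxt == "<end>":
            break
        tokens.append(nxt)
    return tokens[1:]  # drop the start marker


if __name__ == "__main__":
    print(" ".join(generate()))
```

In a vision-language-action setting, the same loop could in principle emit tokens that encode points along a driving path as well as words, which is one way a single sequence model can produce both commentary and a path.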

LINGO-2 has been tested in Wayve’s neural simulator, Ghost Gym, and on public roads. Wayve plans to use the model to demonstrate the potential of aligning linguistic explanations with decision-making. The company believes that this integration could enable a new level of AI explainability and human-machine interaction, building confidence and trust in the technology while also creating a more collaborative driving experience.