SoftBank has developed a traffic understanding multimodal AI designed to remotely support autonomous driving, operating on low-latency edge AI servers with the goal of achieving fully unmanned operations.

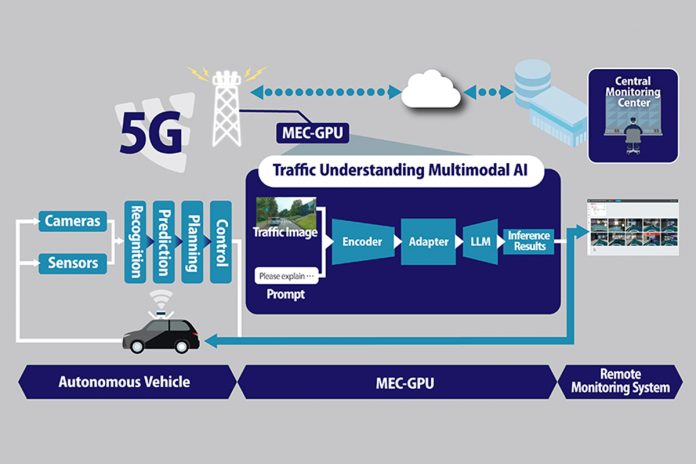

The company’s traffic understanding multimodal AI aims to address key challenges in the social implementation of autonomous driving services by enhancing vehicle safety and reducing operational costs through external support. By running the multimodal AI in real time on SoftBank’s MEC (Multi-access Edge Computing) and other edge AI servers, which offer low latency and high security, the system can understand the status of autonomous vehicles in real time and provide reliable remote support for autonomous driving.

In October 2024, SoftBank launched a field trial of this remote support solution for autonomous driving using the traffic understanding multimodal AI at the Keio University Shonan Fujisawa Campus (SFC). This experiment aims to verify whether the traffic understanding multimodal AI can provide remote support to autonomous driving, ensuring smooth operation even when vehicles encounter unforeseen situations that may impede driving.

The traffic understanding multimodal AI processes forward-facing footage from autonomous vehicles (such as dashcam video) and prompts about current traffic conditions to assess complex driving situations and potential risks, generating recommended actions to enable safe driving. The foundational AI model has been trained on a broad range of Japanese traffic knowledge, including traffic manuals and regulations, along with general driving scenarios and risk situations that are difficult to predict, as well as corresponding countermeasures. This training enables the traffic understanding multimodal AI to acquire a wide spectrum of knowledge essential for the safe operation of autonomous vehicles, providing it with an advanced understanding of traffic conditions and potential driving risks.

In this solution, camera footage from autonomous vehicles is transmitted in real time to the MEC servers via a 5G system. The traffic understanding multimodal AI, running on GPUs (Graphics Processing Units), analyzes potential driving risks from the transmitted footage and other data, then expresses these risks and the recommended countermeasures in natural language to provide real-time remote support for autonomous driving.

This process allows autonomous vehicles to continue driving safely, even in situations where they are unable to assess risks independently. Currently, remote operators issue instructions to autonomous vehicles based on information analyzed and verbalized by the traffic understanding multimodal AI. The ultimate goal of the trial, however, is to achieve fully unmanned operations by allowing the traffic understanding multimodal AI to issue instructions directly to the autonomous vehicles.
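The two operating modes described above (operator in the loop today, direct instruction as the goal) can be sketched as a simple routing decision. This is only an illustrative sketch: `Advisory`, `DispatchMode`, and `route_advisory` are hypothetical names introduced here, not part of SoftBank’s system, and the real interfaces are not described in this article.

```python
from dataclasses import dataclass
from enum import Enum


class DispatchMode(Enum):
    """Hypothetical operating modes, named after the article's description."""
    OPERATOR_IN_LOOP = "operator_in_loop"  # current setup: an operator relays instructions
    DIRECT = "direct"                      # stated goal: the AI instructs the vehicle itself


@dataclass
class Advisory:
    """Hypothetical container for what the AI verbalizes about a scene."""
    situation: str
    risk: str
    recommended_action: str


def route_advisory(advisory: Advisory, mode: DispatchMode) -> dict:
    """Return a routing record saying who receives the recommended action."""
    if mode is DispatchMode.OPERATOR_IN_LOOP:
        # The remote operator reviews the advisory and decides what to send.
        return {
            "recipient": "remote_operator",
            "requires_confirmation": True,
            "message": advisory.recommended_action,
        }
    # Direct mode: the recommended action goes straight to the vehicle.
    return {
        "recipient": "vehicle",
        "requires_confirmation": False,
        "message": advisory.recommended_action,
    }
```

In this sketch, moving toward unmanned operation changes only the `mode` argument; the advisory itself stays the same.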

In the field trial conducted at SFC, one tested scenario involved approaching a crosswalk with a vehicle stopped in front of it. In this scenario, there is a risk of overlooking a person attempting to cross from behind the stopped vehicle on the left, which could result in a collision with a pedestrian emerging from the vehicle’s blind spot as the autonomous vehicle approaches the crossing. According to Japanese traffic regulations, when approaching an unsignalized crosswalk with a stopped vehicle in front, drivers are required to come to a complete stop before proceeding forward.

In this case, if the autonomous vehicle approaches the crosswalk at high speed or fails to stop, the remote operator must intervene to prevent an accident. However, since remote operators monitor multiple vehicles simultaneously, there is a risk that they may not detect dangers immediately or may be unable to intervene appropriately. To address this, the traffic understanding multimodal AI generates real-time information on the current traffic conditions, driving risks, and recommended actions to mitigate risks, issuing instructions directly to the autonomous vehicle. This enables effective remote support for autonomous driving from an external location.

In this field trial, when the autonomous vehicle approaches a stopped vehicle and a crosswalk and the risk level rises, the system generates the instruction: “There is a vehicle stopped ahead of the crosswalk. Please come to a complete stop, as a pedestrian may suddenly appear.” This confirmed the feasibility of remotely supporting autonomous driving from an external location.
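The input-to-instruction mapping in this crosswalk scenario can be illustrated with a toy rule. The system described here is a trained multimodal model, not a hand-written rule, so the code below is only a sketch of the expected behavior for this one case. `SceneObservation` and `recommend_action` are hypothetical names, and the boolean fields are assumed stand-ins for what the model infers from camera footage.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SceneObservation:
    """Hypothetical summary of a scene; the real system works from video."""
    crosswalk_ahead: bool
    crosswalk_signalized: bool
    stopped_vehicle_before_crosswalk: bool


# Instruction text quoted from the field trial described above.
STOP_INSTRUCTION = (
    "There is a vehicle stopped ahead of the crosswalk. "
    "Please come to a complete stop, as a pedestrian may suddenly appear."
)


def recommend_action(obs: SceneObservation) -> Optional[str]:
    """Return the stop instruction for the trial scenario, else None.

    Encodes only the rule cited in the article: an unsignalized crosswalk
    with a stopped vehicle in front of it requires a complete stop.
    """
    if (
        obs.crosswalk_ahead
        and not obs.crosswalk_signalized
        and obs.stopped_vehicle_before_crosswalk
    ):
        return STOP_INSTRUCTION
    return None
```

A rule like this covers a single scenario. The article’s premise is that a model trained on traffic regulations and risk situations can handle cases that are difficult to enumerate in advance.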

This remote support solution for autonomous driving is currently being utilized experimentally in trials using autonomous driving technology conducted by MONET Technologies. By continuously learning from unpredictable driving risks and recommended actions encountered in real driving environments, the traffic understanding multimodal AI’s accuracy will be further enhanced.

Moving forward, SoftBank will continue to promote research and development practices aimed at the social implementation of autonomous driving, working to address broader societal challenges through the technology.